I’m Hongzhe Bi, a second-year master’s student advised by Professor Jun Zhu in TSAIL, Department of Computer Science and Technology, Tsinghua University. I’m also co-advised by Zhizhong Su at Horizon Robotics. I received my bachelor’s degree from the Class of Artificial Intelligence, Beijing University of Posts and Telecommunications.

My research interests include General Embodied Intelligence, Cross-Embodiment Robot Foundation Models, and Bimanual Dexterous Manipulation.

Publications

General Embodied Intelligence

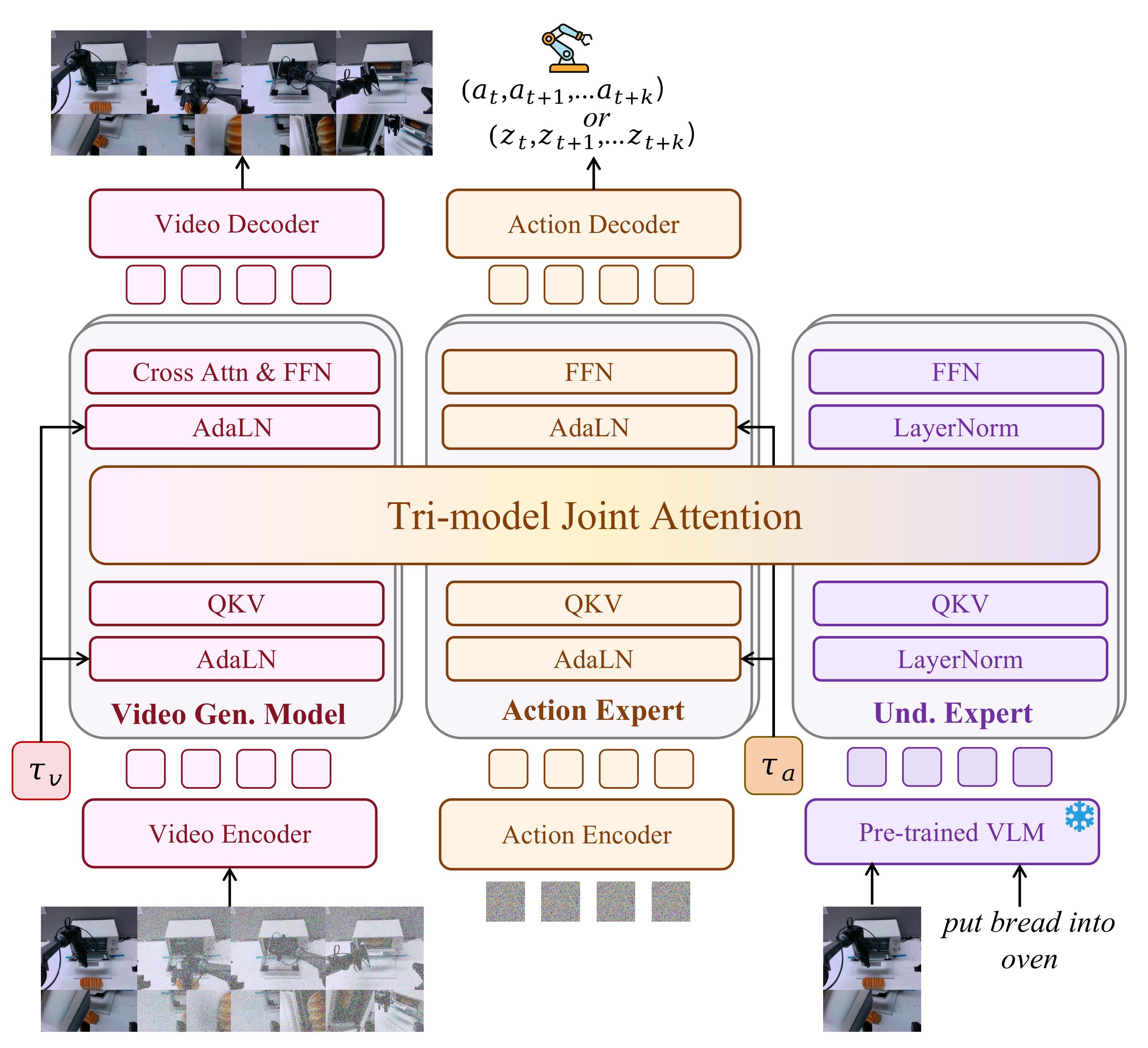

Motus: A Unified Latent Action World Model

Hongzhe Bi, Hengkai Tan, Shenghao Xie, Zeyuan Wang, Shuhe Huang, Haitian Liu, Ruowen Zhao, Yao Feng, Chendong Xiang, Yinze Rong, Hongyan Zhao, Hanyu Liu, Zhizhong Su, Lei Ma, Hang Su, Jun Zhu

- Motus is a unified latent action world model that leverages existing pretrained models and rich, sharable motion information. Motus introduces a Mixture-of-Transformers (MoT) architecture to integrate three experts (understanding, action, and video generation) and adopts a UniDiffuser-style scheduler to enable flexible switching between different modeling modes (World Models, Vision-Language-Action Models, Inverse Dynamics Models, Video Generation Models, and Video-Action Joint Prediction Models). Motus further leverages optical flow to learn latent actions and adopts a three-phase training pipeline and six-layer data pyramid, thereby extracting pixel-level “delta action” and enabling large-scale action pretraining.

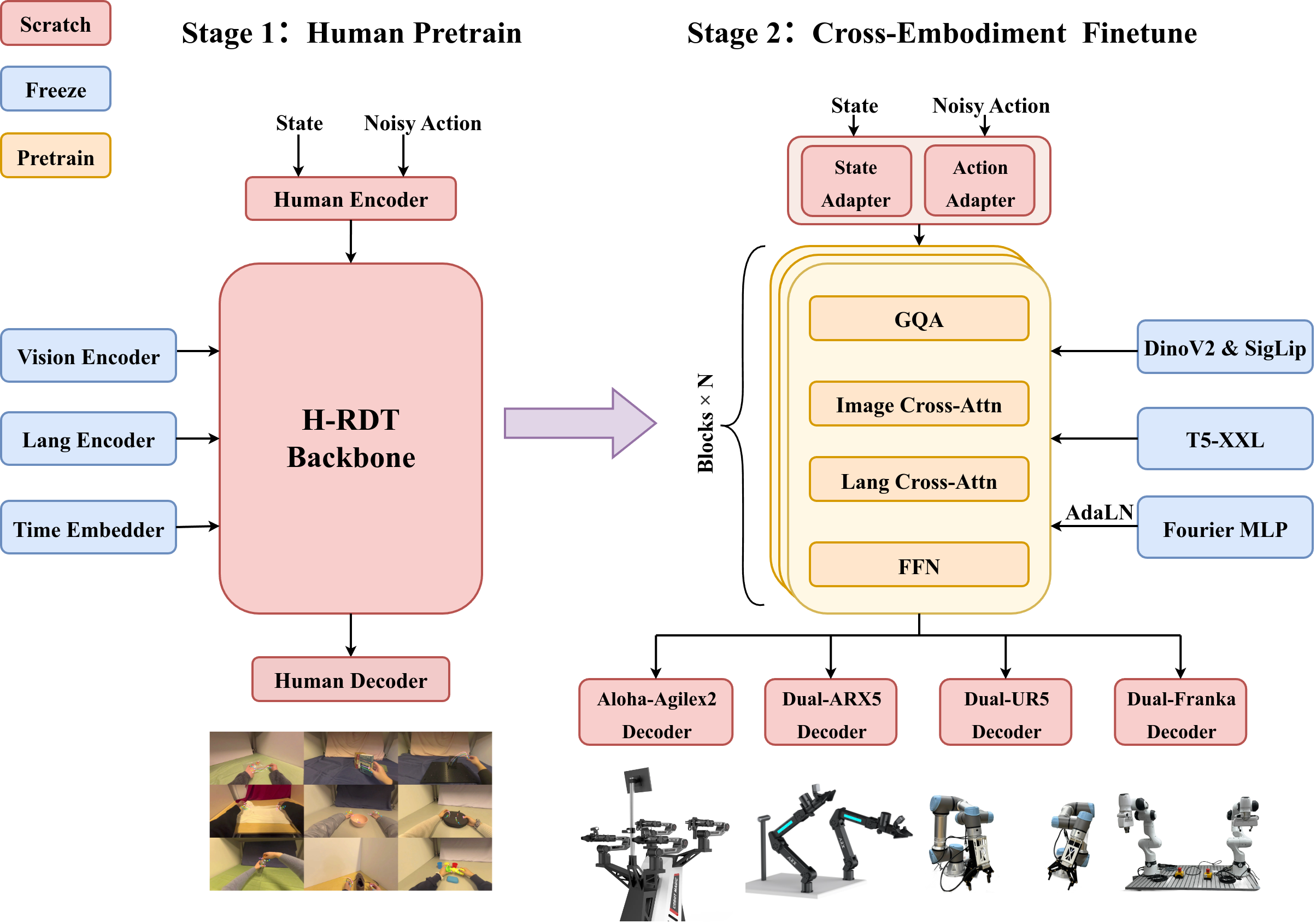

H-RDT: Human Manipulation Enhanced Bimanual Robotic Manipulation

Hongzhe Bi, Lingxuan Wu, Tianwei Lin, Hengkai Tan, Zhizhong Su, Hang Su, Jun Zhu

- H-RDT (Human to Robotics Diffusion Transformer) is a novel approach that leverages human manipulation data to enhance robot manipulation capabilities.

Other Directions

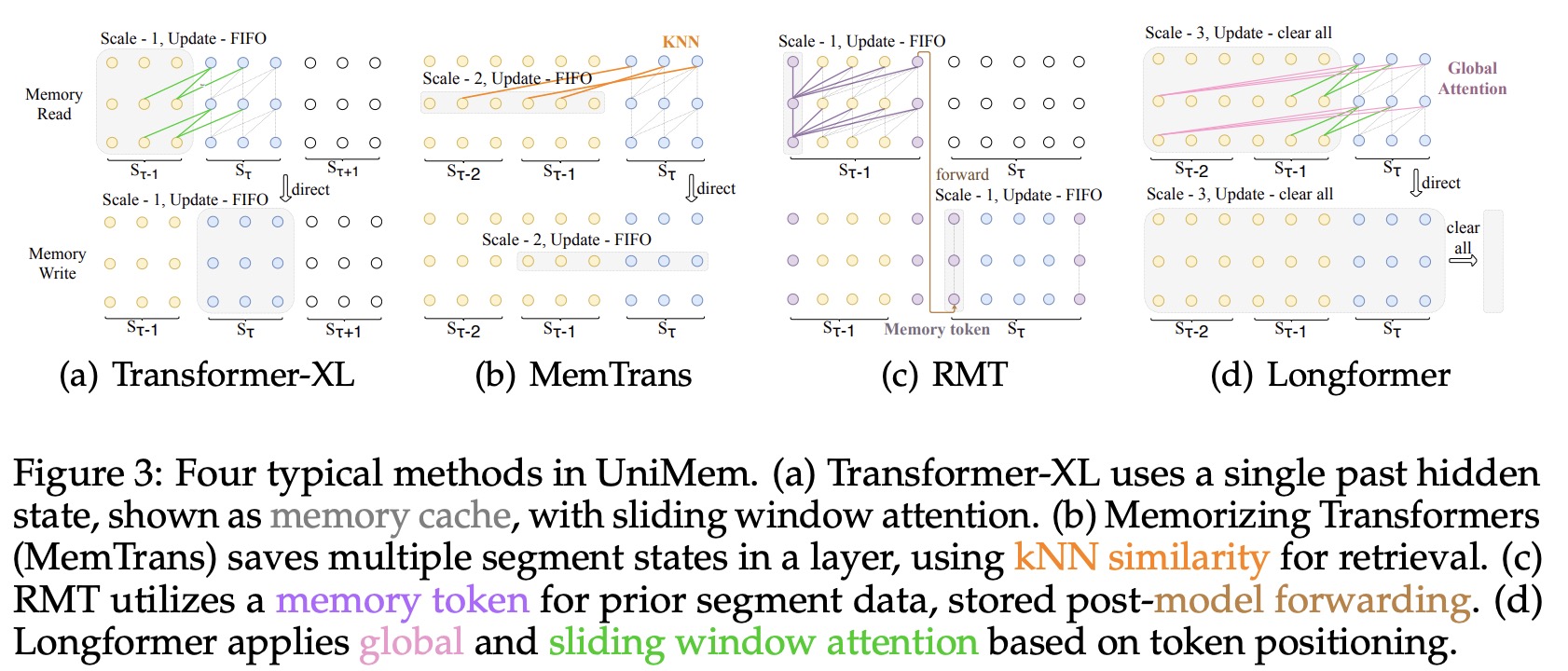

UniMem: Towards a Unified View of Long-Context Large Language Models

Junjie Fang, Likai Tang, Hongzhe Bi, Yujia Qin, Si Sun, Zhenyu Li, Haolun Li, Yongjian Li, Xin Cong, Yankai Lin, Yukun Yan, Xiaodong Shi, Sen Song, Zhiyuan Liu, Maosong Sun

- A unified framework that reformulates existing long-context methods from the perspective of memory augmentation for LLMs.

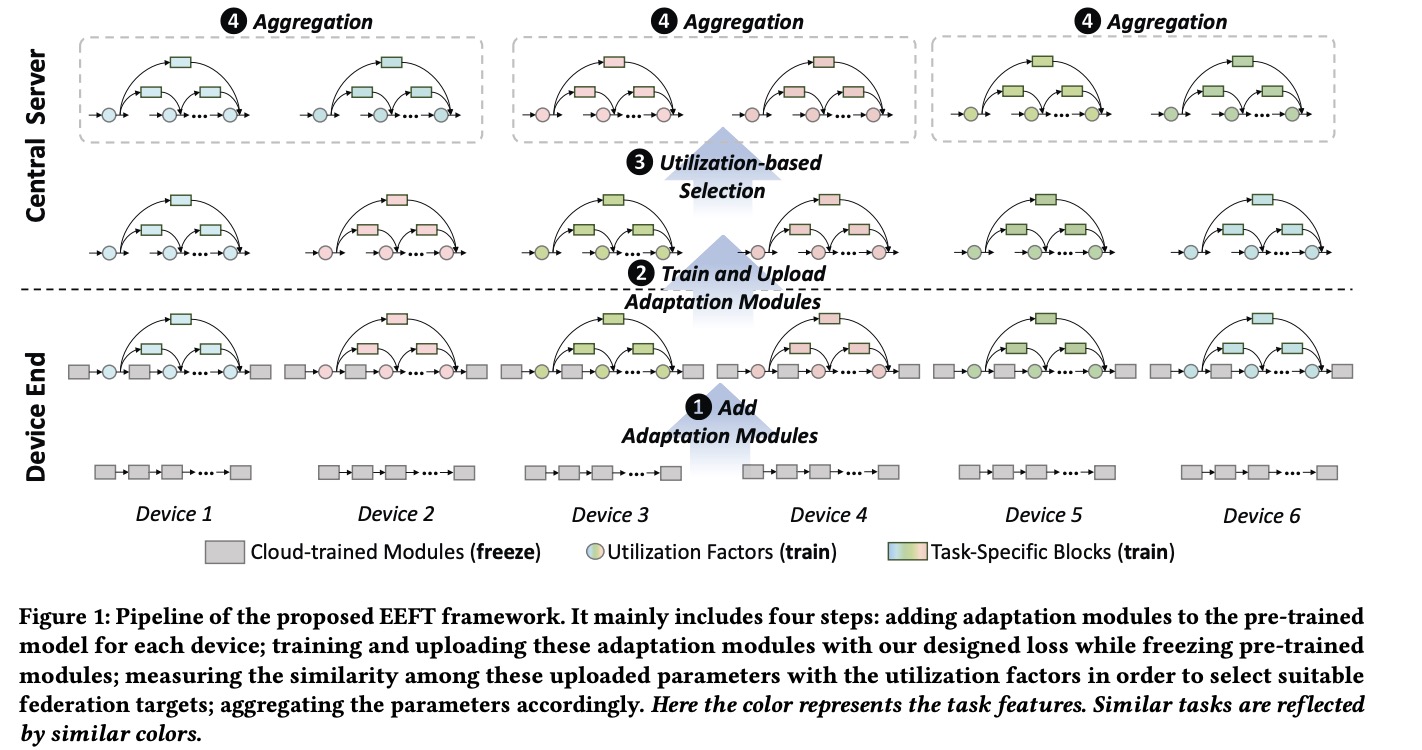

Beyond Fine-Tuning: Efficient and Effective Fed-Tuning for Mobile/Web Users

Bingyan Liu, Yifeng Cai, Hongzhe Bi, Ziqi Zhang, Ding Li, Yao Guo, Xiangqun Chen

- This paper extends local-user fine-tuning to multi-user fed-tuning with the help of Federated Learning (FL).

Honors and Awards

- 2025.06 First place in the Real-world Track and second place in the Simulation Track of the RoboTwin Dual-Arm Collaboration Challenge @ CVPR 2025

Education

- 2024.09 - now, Master, TSAIL, Department of Computer Science and Technology, Tsinghua University

- 2020.09 - 2024.06, Undergraduate, School of Artificial Intelligence, Beijing University of Posts and Telecommunications (BUPT)

- 2014.09 - 2020.06, High School, Beijing 101 Middle School

Internships

- 2024.10 - now, Horizon Robotics Lab

- 2024.04 - 2024.08, D-Robotics

- 2023.10 - 2024.01, ModelBest